Data Privacy and Cybersecurity with Shane Morganstein

Shane Morganstein, an Associate at BLG, hosted the sixth seminar, on Data Privacy and Cybersecurity, as part of the 2022-2023 IMI BIGDataAIHUB’s Seminar Series.

Currently, in Canada, we do not have “anything on the books” in terms of law involving artificial intelligence. However, Bill C-27 has been proposed at the federal level, which will overhaul privacy legislation that came into force in the early 2000s. The law has not kept pace with the times in terms of technological advancements. In Quebec, Bill 64 will be coming into force in September 2023. Bill 64 outlines requirements when using personal information to render a decision based exclusively on automated processing (s.12.1). Similarly, Bill C-27 provides requirements for the use of automated decision systems.

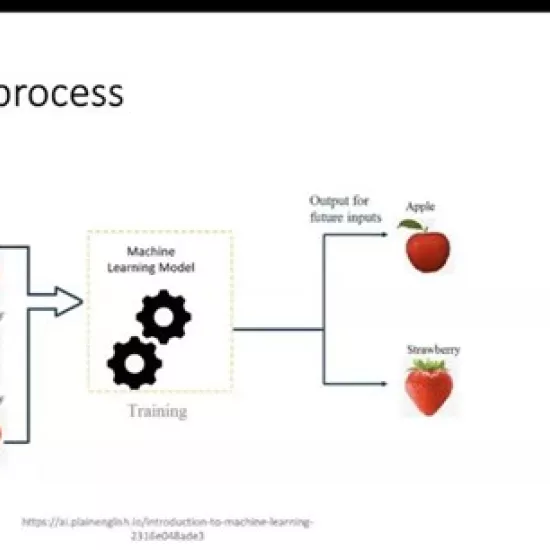

What is an Automated Decision?

It can be difficult to explain a decision made by AI. These decisions often rest on complex, non-linear relationships between inputs and outputs.

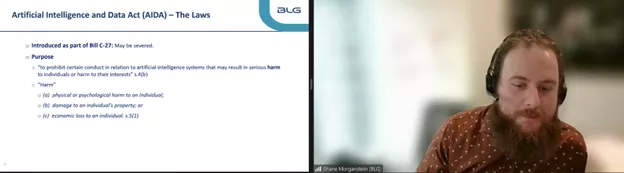

Artificial Intelligence and Data Act

Part three of Bill C-27 is the Artificial Intelligence and Data Act (AIDA). The purpose of AIDA is “to prohibit certain conduct in relation to artificial intelligence systems that may result in serious harm to individuals or harm to their interests” (s. 4(b)). Here, harm is defined as:

- physical or psychological harm to an individual;

- damage to an individual’s property; or

- economic loss to an individual (s. 5(1)).

Shane Morganstein presenting Seminar 6 in the 2022-2023 IMI BIGDataAIHUB’s Seminar Series at the University of Toronto Mississauga. Topic: Data Privacy and Cybersecurity

AIDA defines an artificial intelligence system as “a technological system that, autonomously or partly autonomously, processes data related to human activities through the use of a genetic algorithm, a neural network, machine learning or another technique in order to generate content or make decisions, recommendations or predictions” (s. 2). Within the act, a person is responsible for an artificial intelligence system if “in the course of international or interprovincial trade and commerce, they design, develop or make available for use the artificial intelligence system or manage its operation” (s. 5(2)).

Obligations of a responsible person include:

- Measures to identify, assess and mitigate risk of harm or biased output (s. 8)

- Measures to monitor compliance with mitigation measures (s. 9)

- Record keeping regarding measures (s. 10)

- Notify the Minister if use of the system results, or is likely to result, in material harm (s. 12)

- Publish plain language description of system (s. 11(1))

- Publish plain language description of how system is used (s. 11(2))

What will improved AI and ML tools mean for Data Privacy and Cybersecurity?

1. Improved Cyber Attacks

These technologies will likely result in a higher quantity and quality of cyber attacks, such as phishing emails, fraudulent fund transfers, and ransom negotiations.

a) Phishing emails

With AI technology such as ChatGPT, the quality of phishing emails will improve because spelling errors are eliminated and the language becomes more polished. As a result, attacks that rely on such tools are likely to become more pervasive. Check out the short link to a YouTube video describing this here.

b) Fraudulent fund transfers

AI technology can also increase fraudulent fund transfers, where an attacker impersonates someone to initiate a transfer to a jurisdiction from which it is difficult to get the funds back.

c) Ransom Negotiations

AI technology can increase the propensity for ransom negotiations. This happens when a hacker locks up a system and steals data. Then a company is asked to pay a ransom to unlock their system. Here, the negotiation strategies can be tested easily with a learning model.

2. Novel attack vectors

Furthermore, AI technology could be used to overcome security tools, or look for holes in firewalls to infiltrate and penetrate a hostile network.

3. Intellectual Property

Increased dependency on AI will impact intellectual property claims. For instance, who owns the IP when you use ChatGPT? Individuals own their inputs, not OpenAI. What ChatGPT spits out belongs to the user.

Big Data in Incident Response

There are five steps to incident response practice, namely, contain, restore, investigate, mitigate, and close. Containing the incident starts with the disconnection of services. Restoring involves bringing the services back safely, which often relies on special software. Investigating the incident starts at time zero and proceeds with iterations, including speaking with the threat actor, and formal forensic investigation is often required. Mitigating the incident utilizes legal counsel guidance to address the impact, and may include reporting, sharing, and notifying. Finally, closing the incident involves looking at the cause and committing to improvements.

Mitigation through eDiscovery

A practical big data and AI application in cybersecurity arises from the legal obligation to notify individuals impacted by a cyber incident. Meeting this obligation requires swaths of data to be analyzed with the goals of identifying impacted individuals, identifying impacted data, and identifying how to contact these impacted individuals. The solution can be big data and AI, where automated reviews of datasets can be performed using machine learning techniques.
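
As a rough illustration of what an automated review might look like, the sketch below scans a set of documents for contact details and sensitive identifiers. It is only a simplified, rule-based stand-in: it uses regular expressions rather than the machine learning techniques mentioned above, and it is not the tooling described in the seminar. The function name `scan_documents`, the patterns, and the sample data are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately simple patterns; a real review would need
# far more robust detection (and likely trained models).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
SIN_RE = re.compile(r"\b\d{3}[- ]\d{3}[- ]\d{3}\b")  # 3-3-3 digit identifier


def scan_documents(documents):
    """Return, for each email address found, the files it appears in
    and the categories of sensitive data found in those same files."""
    findings = defaultdict(lambda: {"files": set(), "data_types": set()})
    for name, text in documents.items():
        emails = set(EMAIL_RE.findall(text))
        data_types = set()
        if PHONE_RE.search(text):
            data_types.add("phone")
        if SIN_RE.search(text):
            data_types.add("sin")
        # Assumption: an email address identifies the impacted individual
        # and doubles as the way to contact them.
        for email in emails:
            findings[email]["files"].add(name)
            findings[email]["data_types"].update(data_types)
    return dict(findings)


# Made-up sample data for illustration only.
sample_documents = {
    "hr_export.txt": "Jane Doe, jane.doe@example.com, SIN 123-456-789",
    "notes.txt": "Call jane.doe@example.com at 416-555-0199",
    "memo.txt": "No personal data here.",
}
report = scan_documents(sample_documents)
```

The resulting `report` groups findings by individual, which mirrors the three goals above: who was impacted, what data was impacted, and how to reach them. Treating every identifier in a file as belonging to every email address in that file is a crude assumption that a real review would need to refine.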